
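$$W_2(t+1) = W_2(t) + l_2 \, A_1 \, E \qquad (8.11)$$

$$E = O_d - O_c \qquad (8.12)$$

$$O_c = (o_1, \ldots, o_m) \qquad (8.13)$$

$$o_j = \frac{1}{1 + e^{-\sum_{i=1}^{n} w_{ij} \, a_i}} \qquad (8.14)$$

$$o_j := \begin{cases} 1 & \text{if } o_j \geq \theta \\ 0 & \text{otherwise} \end{cases} \qquad (8.15)$$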

The weight vector ![]() at

at ![]() is the result of the summation of the weight vector

is the result of the summation of the weight vector ![]() at

at ![]() and the product of the learning constant

and the product of the learning constant ![]() with the activation value of the evolving neuron

with the activation value of the evolving neuron ![]() and the error vector

and the error vector ![]() . The calculated output vector

. The calculated output vector ![]() contains all the output activations

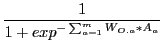

contains all the output activations ![]() . The output activation is in the cited papers of Watts and Kasabov not clearly defined. We are using here a sigmoid function which takes as exponent the sum of the product of the weight vector of all weights connected to an output neuron

. The output activation is in the cited papers of Watts and Kasabov not clearly defined. We are using here a sigmoid function which takes as exponent the sum of the product of the weight vector of all weights connected to an output neuron ![]() from

from ![]() connected evolving neurons, where each sending neuron has the activity

connected evolving neurons, where each sending neuron has the activity ![]() . Additionally one has to use some threshold if the gvalues of

. Additionally one has to use some threshold if the gvalues of ![]() are all binary.

are all binary.
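The following Python sketch illustrates the output computation and the update of the outgoing weights described above. It rests on several assumptions that are not stated in the text:

- Only the outgoing weights of the winning evolving neuron are changed, as in SECoS.
- The error is computed from the raw sigmoid outputs.
- The binary threshold is 0.5.

The function names and the list-of-lists weight layout are hypothetical choices for illustration, not taken from Watts and Kasabov.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # weights[i][j]: evolving neuron i -> output neuron j


def output_activations(weights: Matrix, activities: Vector) -> Vector:
    """Sigmoid output activation: o_j = 1 / (1 + exp(-sum_i w_ij * a_i))."""
    n_outputs = len(weights[0])
    outputs = []
    for j in range(n_outputs):
        net = sum(weights[i][j] * activities[i] for i in range(len(activities)))
        outputs.append(1.0 / (1.0 + math.exp(-net)))
    return outputs


def threshold(outputs: Vector, theta: float = 0.5) -> List[int]:
    """Map sigmoid outputs to binary values (theta = 0.5 is an assumed default)."""
    return [1 if o >= theta else 0 for o in outputs]


def update_outgoing_weights(weights: Matrix, activities: Vector, winner: int,
                            desired: Vector, learning_rate: float) -> Vector:
    """W(t+1) = W(t) + l * a_winner * E, with E = desired - calculated.

    Assumption: only the row of the winning evolving neuron is updated.
    Modifies `weights` in place and returns the error vector E.
    """
    calculated = output_activations(weights, activities)
    error = [d - c for d, c in zip(desired, calculated)]
    a_k = activities[winner]
    weights[winner] = [w + learning_rate * a_k * e
                       for w, e in zip(weights[winner], error)]
    return error


# Usage: two evolving neurons, two output neurons, all weights start at zero.
W2 = [[0.0, 0.0], [0.0, 0.0]]
a = [1.0, 0.0]  # neuron 0 is the winner and fully active
err = update_outgoing_weights(W2, a, winner=0, desired=[1.0, 0.0], learning_rate=0.5)
print(err)  # [0.5, -0.5]
print(W2)   # [[0.25, -0.25], [0.0, 0.0]]
print(threshold(output_activations(W2, a)))  # [1, 0]
```

With all weights at zero, every sigmoid output is 0.5, so the first error is simply the desired vector minus 0.5. After one step, the thresholded outputs already match the binary target.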

A more detailed description of the SECoS, as well as a discussion of its properties, can be found in the application examples below.

Gerd Doeben-Henisch 2012-03-31